Experiment and Data Logging of the Gyro Sensor

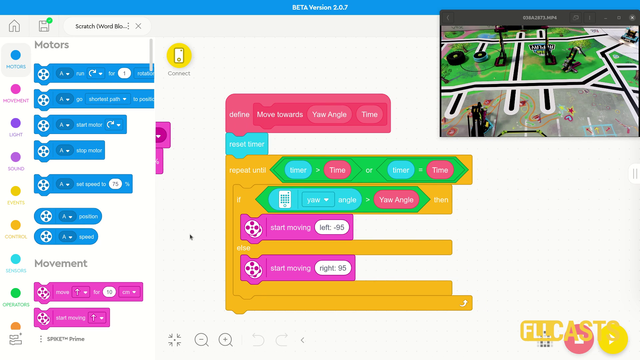

Let's record the Gyro Sensor's values while the robot is moving and trying to keep its orientation straight. It is an interesting experiment, and to run it we will use file access to write each value to a file.
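The logging idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the program used in the tutorial: `read_angle` is a hypothetical callable standing in for whatever returns the gyro angle on your robot, and `log_gyro`, `make_fake_gyro`, and the CSV column names are made up for this example.

```python
import csv
import time


def log_gyro(read_angle, path, samples=50, interval_s=0.0):
    """Write `samples` rows of (elapsed seconds, angle) to a CSV file.

    `read_angle` is an assumed callable that returns the current gyro angle.
    Returns the number of data rows written.
    """
    start = time.monotonic()
    rows = 0
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["elapsed_s", "angle_deg"])  # header row
        for _ in range(samples):
            elapsed = round(time.monotonic() - start, 3)
            writer.writerow([elapsed, read_angle()])
            rows += 1
            if interval_s > 0:
                time.sleep(interval_s)  # pause between samples
    return rows


def make_fake_gyro(readings):
    """Hypothetical stand-in for a real sensor: replays a fixed list of angles."""
    it = iter(readings)
    return lambda: next(it)
```

Calling `log_gyro(make_fake_gyro([0, 1, -1, 2]), "gyro.csv", samples=4)` would write a header line followed by four rows. On a real robot you would pass the sensor's own read function instead of the fake one, and the resulting file can be opened in a spreadsheet to plot how far the heading drifts.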

- #662

- 10 Jan 2018